You can't call yourself an SEO Technician if you don't use the Indexing Report in Google Search Console.

It is an invaluable tool for understanding:

- Which URLs have been crawled and indexed by Google and which have not.

- And, more importantly, why did the search engine make this choice regarding the URL.

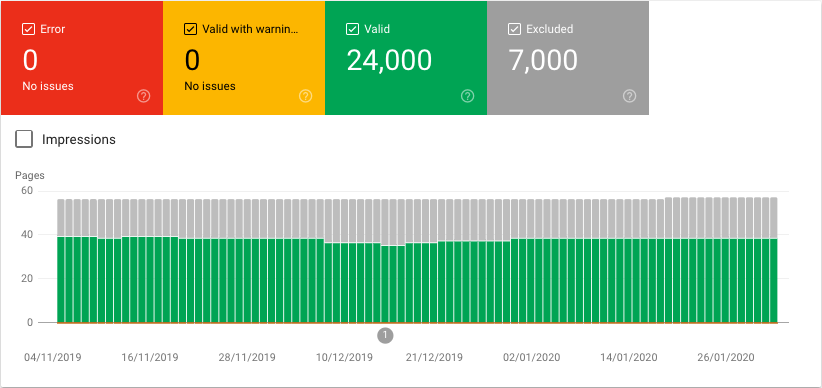

The report seems relatively simple with its traffic light color scheme.

- Red (Error) : Stop! Pages are not indexed.

- Yellow (valid with warnings) : If you have free time, stop, otherwise step on the gas and go! Pages can be indexed.

- Green (really) : everything is fine. Pages are indexed.

The problem is that there is a big gray area (excluded) .

And if you go into details, it seems that the rules of the road are written in a foreign language. Google.

So, today we will translate the status types in the indexing report into elements of SEO actions that you should take to improve indexing and increase organic performance.

Here for a specific violation? Feel free to use quick links:

Impact on SEO: Eliminate these violations as a priority.

- Found - not currently indexed

- Crawled - not currently indexed

- Duplicate without canonical version selected by user

- Duplicate submitted URL not selected as canonical

- Duplicate, Google chose canonical other than custom

- Submitted URL not found (404)

- redirect error

- Server Error (5xx)

- Scan Anomaly

- Indexed but blocked by robots.txt

Additional Considerations Required: These may or may not require action, depending on your SEO strategy.

- Indexed, not submitted to sitemap

- Blocked by robots.txt file

- Submitted URL blocked by robots.txt file

- Submitted URL is marked as noindex

- Submitted URL returns unauthorized request (401)

- Submitted URL has problems crawling

- Submitted URL looks like Soft 404

- Soft 404

Natural Status: No action required.

- Sent and indexed

- Alternate page with correct canonical tag

- Excluded by noindex tag

- Page with redirect

- Not found (404)

- Blocked by page removal tool

Issues Affecting SEO in the Indexing Report

Don't just focus on fixing bugs. Often the bigger successes in SEO are actually buried in a gray area of exclusion.

Below are the index coverage report issues that are really important for SEO, listed in order of priority, so you know what to look out for first.

Found - not currently indexed

Cause: The URL is known to Google, often from links or an XML sitemap, and is in the crawl queue, but Googlebot hasn't had time to crawl it yet. This indicates a problem with the scan budget.

How to fix it: If it's just a few pages, start a manual crawl by submitting the URLs to Google Search Console.

If there are many, take the time to fix the website architecture long-term (including the URL structure, site taxonomy, and internal links) to resolve crawl budget issues at their source.

Crawled - not currently indexed

Reason. Googlebot crawled the URL and found that the content was not worthy of being indexed. This is most often due to quality issues such as thin content, outdated content, doorways or user generated spam. If the content is decent but not indexed, you're probably rendering confused.

How to fix it: Review the content of the page.

If you understand why Googlebot found the content of the page not valuable enough to be indexed, ask yourself the second question. Should this page exist on my site?

If the response is negative, 301 or 410 URLs. If so, keep adding the noindex tag until the content issue is resolved. Or, if it's a parameter-based URL, you can prevent the page from being crawled with optimal parameter handling.

If the content is of acceptable quality, check what is displayed without JavaScript. Google can index JavaScript-generated content, but this is a more complex process than HTML because there are two waves of indexing when JavaScript is involved.

The first wave indexes the page based on the HTML source code from the server. This is what you see when you right-click and view the page's source code.

The second index is based on the DOM, which includes both HTML and client-side rendered JavaScript. This is what you see when you right click and check.

The problem is that the second wave of indexing is being delayed until Google has render resources available. This means that JavaScript-based content takes longer to index than HTML-only content. From several days to several weeks from the moment of scanning.

To avoid indexing delays, use server-side rendering to ensure that all required content is present in the original HTML. This should include your hero SEO elements such as page titles, titles, canonical elements, structured data, and of course your main content and links.

Duplicate without user-selected canonical

Reason. Google considers that the page contains duplicate content, but does not have a clear canonical designation. Google decided that this page should not be canonical, and therefore excluded it from the index.

How to fix it: Explicitly mark the correct canonical, using rel=canonical links, for every crawlable URL on your website. You can understand which page Google has chosen as its canonical page by checking the URL in the Google Search Console.

Duplicate submitted URL is not selected as canonical

Reason: Same as above, except that you explicitly requested that this URL be indexed, for example by submitting it to an XML sitemap.

How to fix it: Explicitly mark the correct canonical, using rel=canonical links, for every crawlable URL on your website, and be sure to only include canonical pages in your XML sitemap.

Duplicate, Google chose canonical different from custom

Reason. The page has a rel=canonical link, but Google disagrees with this suggestion and has chosen a different URL to be indexed as the canonical.

How to fix it: Check the URL to see Google's chosen canonical URL. If you agree with Google, change the rel=canonical link. Otherwise, work on your website architecture to reduce duplicate content and send stronger ranking signals to the page you want to be canonical.

Submitted URL not found (404)

Cause: The URL you submitted, probably via your XML sitemap, does not exist.

How to fix it: Create a URL or remove it from the XML sitemap. You can systematically avoid this error by following the best practices for creating dynamic XML sitemaps.

redirect error

Reason: Googlebot had a redirect issue. This is most commonly caused by redirect chains of five or more URLs, redirect loops, an empty URL, or an overly long URL.

How to fix it: Use a debug tool like Lighthouse or a status code tool like httpstatus.io to understand what is breaking the redirect and therefore how to solve it.

Make sure your 301 redirects always point directly to the final destination, even if that means editing old redirects.

Server Error (5xx)

Cause: Servers return an HTTP 500 response code (also known as an internal server error) when they fail to load a page. This can be caused by broader server issues, but is most commonly caused by a momentary server outage that prevents Googlebot from crawling the page.

How to fix it: Don't worry if it's "once upon a blue moon". The error will disappear by itself after a while. If the page is important, you can call Googlebot on the URL by requesting indexing as part of the URL check. If the error persists, talk to your systems engineer/tech lead/hosting company to improve your server infrastructure.

Scan Anomaly

Reason: Something prevented the URL from being crawled, but even Google doesn't know what it is.

How to fix it: Load the page with the URL Inspection Tool to see if any 4xx or 5xx level response codes are returned. If that doesn't give any clues, please send the URLs to your development team.

Indexed but blocked by Robots.Txt

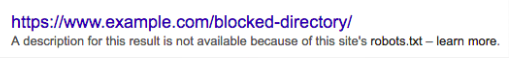

Reason. Think of robots.txt as the digital equivalent of a no entry sign on an unlocked door. Googlebot obeys these instructions, but does so in accordance with the letter of the law, not the spirit.

Thus, you may have pages that are specifically prohibited in the robots.txt file that will show up in search results. Because if the blocked page has other strong ranking signals, such as links, Google may consider it relevant for indexing.

Even though the page has not been crawled. But since the content of this URL is unknown to Google, the search result looks something like this.

How to fix it: To permanently block a page from showing up in SERPs, don't use robots.txt. You need to use the noindex tag or prevent anonymous access to the page using auth.

Be aware that URLs with a noindex tag will also be crawled less often and if they are present for a long time, this will eventually cause Google to not follow the page links as well, which means they will not add these links to the scan queue and ranking signals. will not be passed on to linked pages.

More thought needed

Many problems with the Google Search Console index coverage report are caused by conflicting directives.

It's not that one is right and the other is wrong. You just need to be clear about your goal and make sure that all site signals are aligned with that goal.

Indexed, not submitted to Sitemap

Reason: The URL was discovered by Google, probably via a link, and indexed. But it was not submitted to the XML sitemap.

What to do: If the URLs are SEO relevant, add them to the XML sitemap. This will ensure that new content or updates to existing content are quickly indexed.

Otherwise, consider if you want to index URLs. URLs are judged on more than just their own merit. Every page indexed by Google affects how quality algorithms evaluate a domain's reputation.

If pages are indexed but not represented in the sitemap, this is often a sign that the site is suffering from index bloat when an excessive number of low-value pages have entered the index.

This is usually caused by auto-generated pages such as filter combinations, archive pages, tag pages, user profiles, pagination, or fraudulent options. Index bloat prevents a domain from ranking all of its URLs.

Blocked by Robots.Txt

Reason: Googlebot won't crawl the URL because it's blocked by the robots.txt file. But this does not mean that the page will not be indexed by Google. When you start seeing "indexed but blocked by robots.txt" warnings in the indexing report.

What to do: to prevent the page from being indexed by Google, remove the robots.txt block and use the noindex directive.

Submitted URL blocked by Robots.Txt

Cause: The URL you submitted, probably via an XML sitemap, is also blocked by your robots.txt file.

What to do: Remove the URL from the XML sitemap if you don't want it to be crawled and indexed, or the block rule from the robots.txt file if you do. If you are using a hosting service that does not allow you to change this file, change the web hosts.

Submitted URL is marked as "Noindex"

Reason. The URL you submitted, probably via an XML sitemap, is marked as noindex either with meta robots tags or in X-Robots HTTP header tags.

What to do: Remove the URL from the XML sitemap if you don't want it to be crawled and indexed, or remove the noindex directive if you prefer.

Submitted URL returns unauthorized request (401)

Reason: Google is not authorized to crawl the URL you submitted, such as password-protected pages.

What to do: If there is no reason to protect content from indexing, remove the authorization requirement. Otherwise, remove the URL from the XML sitemap.

Submitted URL has a crawling issue

Cause: Something is causing a crawling issue, but even Google can't name it.

What to do: Try to debug the page with the URL Inspection tool. Check page load times, blocked resources, and unnecessary JavaScript code.

If that doesn't work, use the old-fashioned way of downloading a URL to your mobile phone and see what happens on the page and in the code.

Submitted URL looks like a soft 404

Reason: Google considered the URL you submitted, probably via your XML sitemap, to be a soft 404, meaning the server responds with a 200 success code, but the page:

- Does not exist.

- Contains little or no content (that is, thin content), such as pages with empty categories.

- Has a redirect to an irrelevant target URL, such as the home page.

What to do: If the page really doesn't exist and was deliberately removed, return 410 for the fastest possible de-indexing. Be sure to display a custom "not found" page to the user. If the other URL doesn't have similar content, then implement a 301 redirect to send ranking signals.

If there is a lot of content on the page, make sure Google can display all of that content. If it really suffers from thin content, if the page has no reason to exist, 410 or 301 if it does, remove it from your XML sitemap to avoid getting Google's attention, add a noindex tag and work on a longer-term solution for filling the page with valuable content.

If there is a redirect to an irrelevant page, change it to the relevant page, or if that's not possible, to the 410 page.

Soft 404

Reason: Same as above, but you didn't specifically request that the page be indexed.

What to do . As in the previous case, either show Google more content, 301 or 410 respectively.

Natural statuses in the index coverage report

The goal is not for every URL on your site to be indexed, in other words, valid, although this number should steadily increase as your site grows.

The goal is to index the canonical version of SEO-relevant pages.

It's not only natural, but useful for SEO to have a few pages marked as excluded in the indexing report.

This shows that you remember that Google has evaluated your domain's reputation based on all indexed pages and has taken appropriate action to exclude pages that should exist on your website but should not count towards Google's view of your content.

Sent and indexed

Reason: You submitted a page via XML sitemap, API, or manually to Google Search Console and Google indexed it.

No fix required: unless you want these URLs to be in the index.

Alternate page with correct canonical tag

Reason: Google successfully rendered the rel=canonical tag.

No fix required: The page already specifies its canonical value correctly. There is nothing else to do.

Excluded by Noindex tag

Reason: Google crawled the page and took into account the noindex tag.

No Fix Needed: If you don't want these URLs to be indexed, in which case remove the noindex directive.

Page with redirect

Reason: Your 301 or 302 redirect was successfully crawled by Google. The destination URL has been added to the crawl queue and the source URL has been removed from the index.

Ranking signals without dilution will be sent after Google crawls the target URL and confirms that the target URL has similar content.

No Fix Needed: This exception will decrease naturally as redirects are processed.

Not found (404)

Cause: Google found the URL not through an XML sitemap, but through a link from another website. When crawled, the page returned a 404 status code. As a result, Googlebot will crawl the URL less frequently over time.

No Fix Needed: If the page really doesn't exist because it was intentionally removed, there's nothing wrong with returning a 404. There's no Google penalty for accumulating 404 codes. This is a myth.

But that doesn't mean it's always best practice. If the URL had any ranking signals, they are lost in the 404 void. So, if you have another page with similar content, consider going to a 301 redirect.

Blocked by page removal tool

Reason: A URL removal request was sent to Google Search Console.

No Fix Required: Delete request expires after 90 days. After this period, Google may re-index the page.

Summarize

In general, prevention is better than cure. Well thought out website architecture and bots often result in a clean and understandable Google Search Console index coverage report.

But since most of us have inherited the work of others rather than building from scratch, it's an invaluable tool to help you focus where it's needed most.

Be sure to check the report each month to track Google's progress in crawling and indexing your site and to document the impact of SEO changes.

Additional resources:

- How Search Engines Crawl and Index: Everything You Need to Know

- Your Indexed Pages Are Crashing - 5 Possible Reasons

- 11 SEO Tips and Tricks to Improve Indexing

No comments:

Post a Comment

Note: only a member of this blog may post a comment.